Do applied programming languages research at Jane Street!

As our Tools & Compilers team has grown, the kinds of projects we work on have become more ambitious. Here are some of the major...

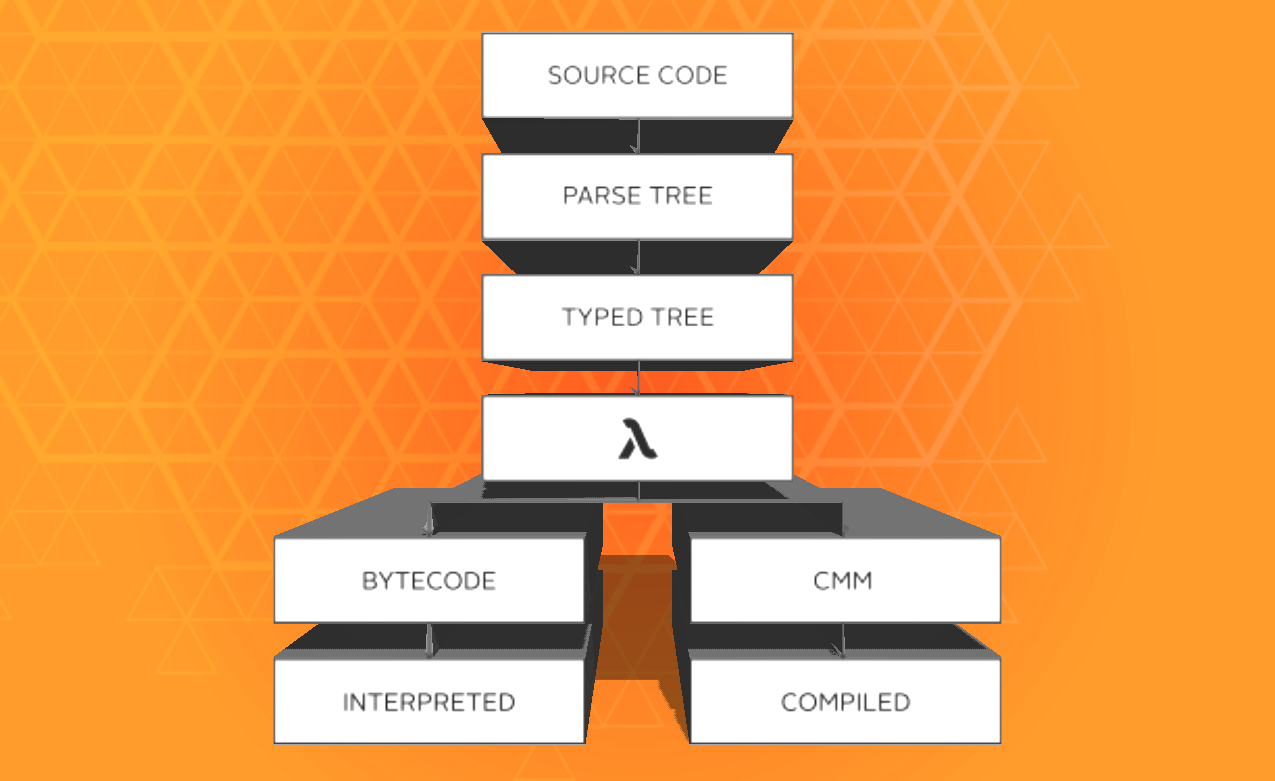

Now that OCaml 4.08 has been released, let’s have a look at what was accomplished, with a particular focus on how our plans for 4.08...

Welcome to another post in our series of how to use OCaml for machine learning. In previous posts we’ve discussed artistic style-transfer and reinforcement learning....

At Jane Street, for the last several years, we have been increasingly interested in machine learning and its many use cases. This is why it...

If you haven’t heard of it, Depth First Learning is a wonderful resource for learning about machine learning.

Jane Street is sponsoring this year’s MakeMIT hackathon, and we wanted to create a prize for the winners that would do justice to the maker...

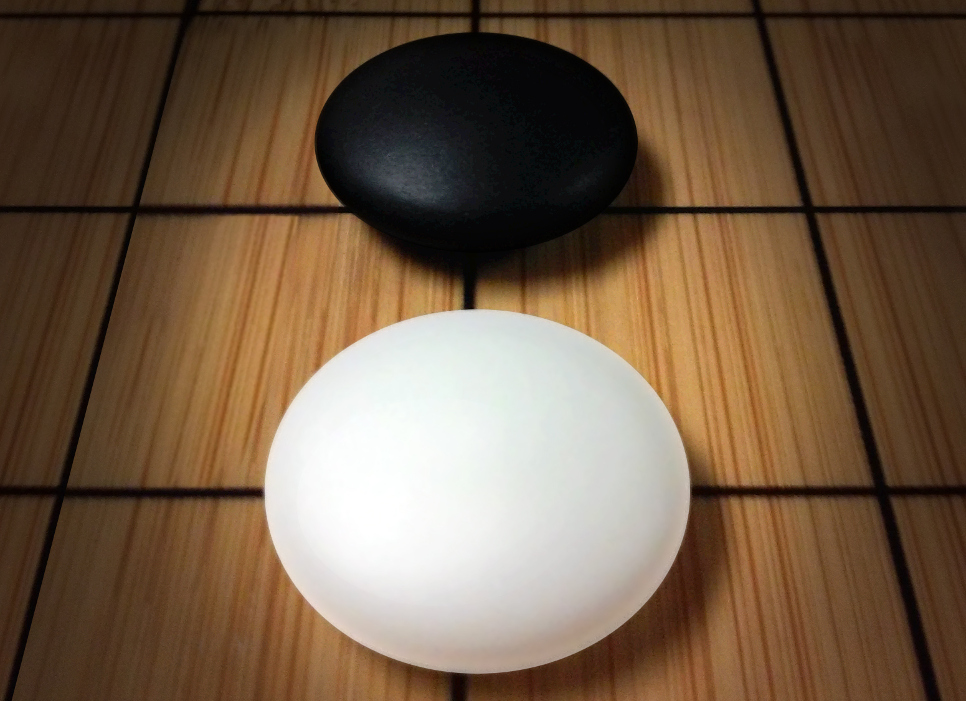

At Jane Street, over the last few years, we’ve been increasingly exploring machine learning to improve our models. Many of us are fascinated by the...

In a previous blog post we detailed how we used OCaml to reproduce some classical deep-learning results that would usually be implemented in Python. Here...

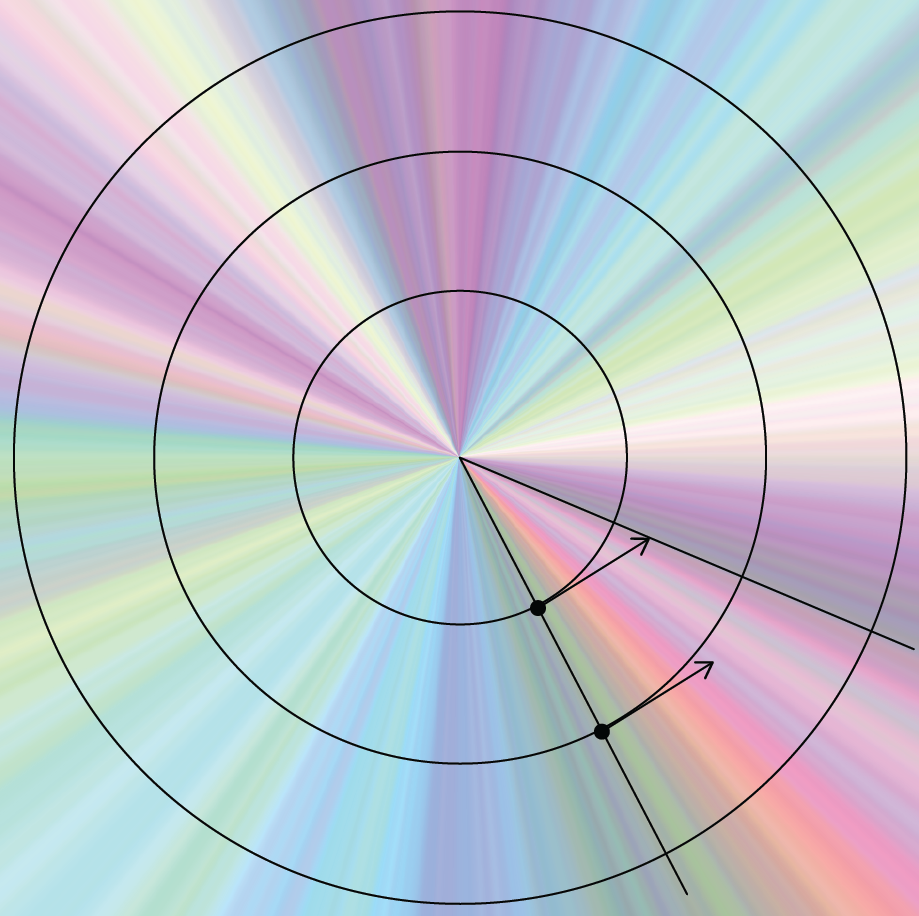

This blog post is about an interesting detail of machine learning that I came across as a researcher at Jane Street: that of the...

At Jane Street, our web UIs are built on top of an in-house framework called Incr_dom, modeled in part on React’s virtual DOM. Rendering different...

At Jane Street, we often work with data that has a very low signal-to-noise ratio, but fortunately we also have a lot of data. Where...

Last year we held a machine learning seminar in our London office, which was an opportunity to reproduce some classical deep learning results with a...

Yet again, intern season is coming to a close, and so it’s time to look back at what the interns have achieved in their short...

With the external release of OCaml 4.07.0 imminent, we in Jane Street’s Tools & Compilers group have been planning what we want to work on...

Expect tests are a technique I’ve written about before, but until recently, it’s been a little on the theoretical side. That’s because it’s been hard...

One of the joys of working at Jane Street for the last 15 or so years has been seeing how our software stack has grown...

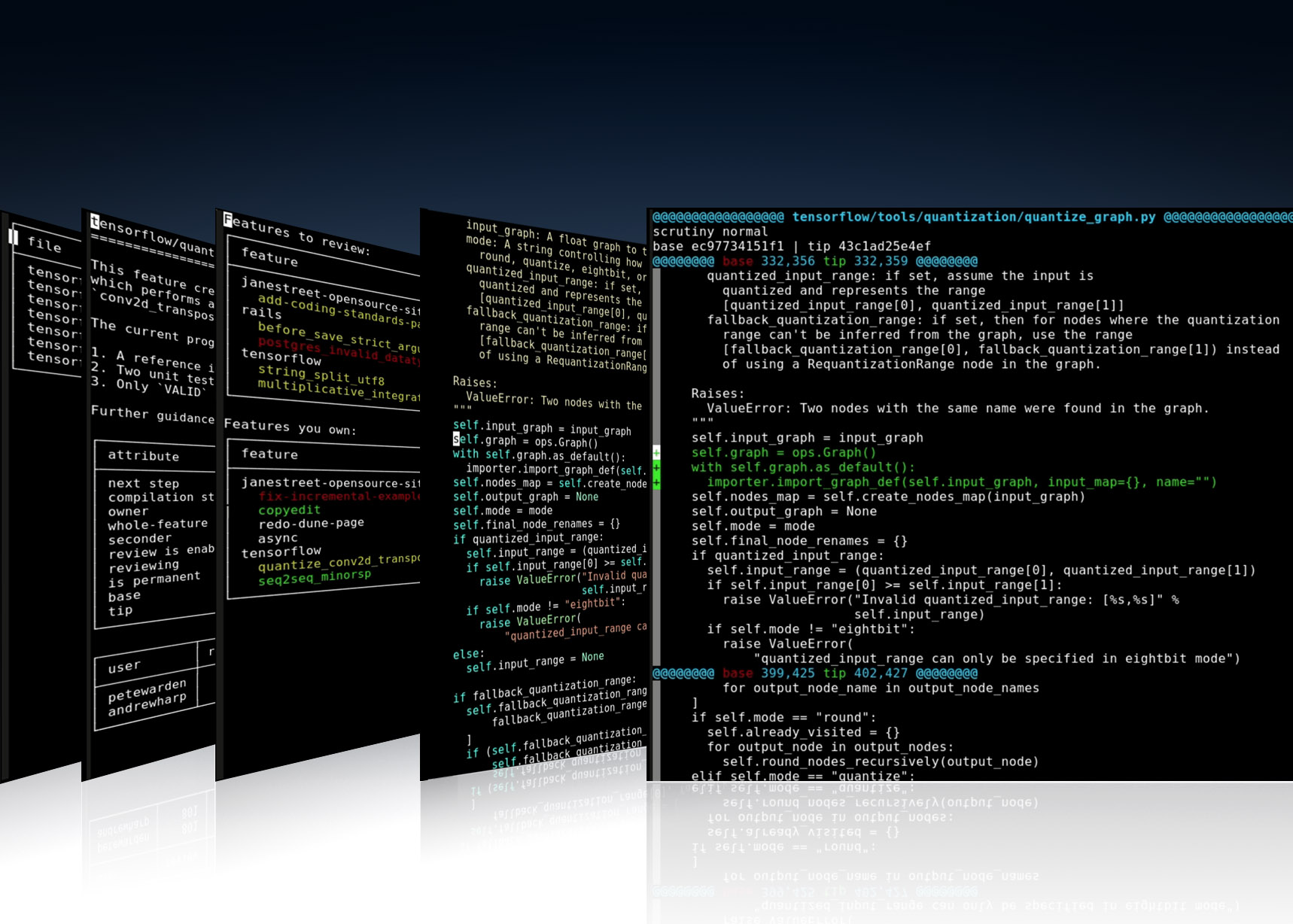

Imagine a system for editing and reviewing code where:

Interested in learning OCaml? In the NYC area? Then this might be for you!